textgrad

Automatic "Differentiation" via Text: using large language models to backpropagate textual gradients.



An autograd engine -- for textual gradients!

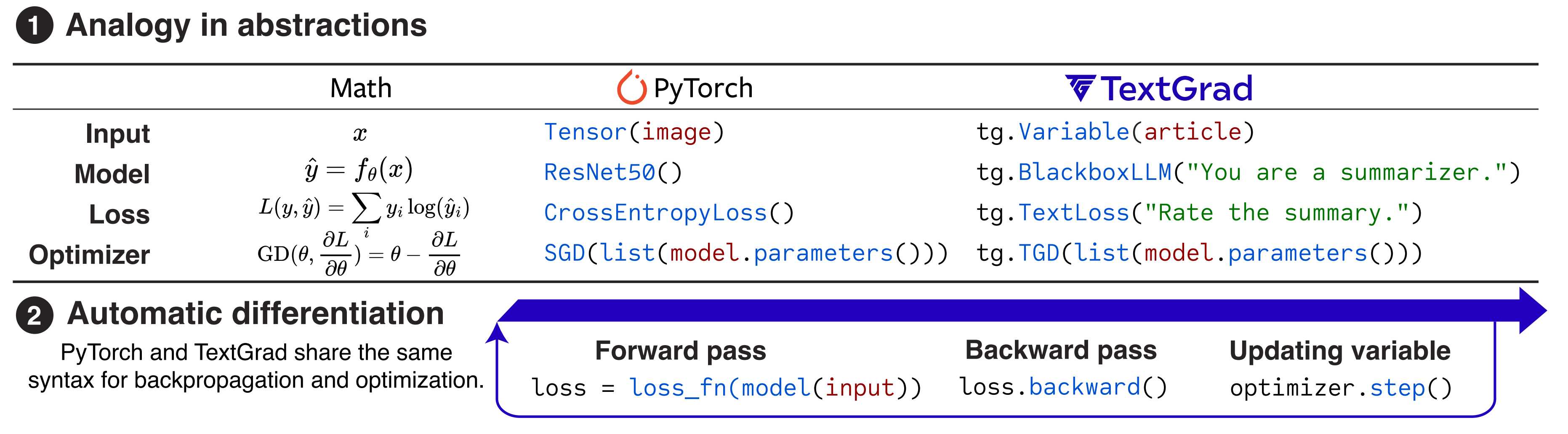

TextGrad is a framework for automatic "differentiation" via text. It implements backpropagation through text feedback provided by LLMs, building strongly on the gradient metaphor.
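The gradient metaphor can be written out as an analogy. This is a hedged sketch, not notation taken from this page: Critique and Rewrite are placeholder names for two LLM calls, one that writes feedback on a piece of text and one that revises text given that feedback. As in the chain rule, the feedback for the input x is conditioned on the feedback already computed for the downstream output y.

```latex
% Numeric gradient descent on a parameter \theta with step size \eta:
\theta \leftarrow \theta - \eta \, \frac{\partial \mathcal{L}}{\partial \theta}

% Textual analogue for a pipeline x \rightarrow y = f(x) \rightarrow \mathcal{L}(y):
% each "derivative" is natural-language feedback, and the update is an LLM rewrite.
\frac{\partial \mathcal{L}}{\partial y} = \mathrm{Critique}\big(y, \mathcal{L}\big), \qquad
\frac{\partial \mathcal{L}}{\partial x} = \mathrm{Critique}\Big(x, y, \frac{\partial \mathcal{L}}{\partial y}\Big), \qquad
x \leftarrow \mathrm{Rewrite}\Big(x, \frac{\partial \mathcal{L}}{\partial x}\Big)
```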

We provide a simple and intuitive API that lets you define your own loss functions and optimize them using text feedback. The API mirrors PyTorch's, which makes it easy to adapt to your use cases.
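The PyTorch-style loop described above (define a loss, call `backward()`, then let an optimizer `step()`) can be sketched in plain Python. This is a toy illustration under stated assumptions, not TextGrad's implementation: `Variable`, `TextLoss`, `Loss`, `TextOptimizer` and `stub_llm` are hypothetical names that only loosely mirror PyTorch conventions, and `stub_llm` replaces real LLM calls with a fixed rule so the example runs offline. Consult the TextGrad documentation for the library's actual API.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical stand-in for an LLM call: maps a prompt string to a reply string.
LLM = Callable[[str], str]


@dataclass
class Variable:
    """A text value that can accumulate textual 'gradients' (feedback strings)."""
    value: str
    role: str
    requires_grad: bool = True
    grads: List[str] = field(default_factory=list)


@dataclass
class Loss:
    """Result of evaluating a variable: the critic's feedback plus its target."""
    feedback: str
    target: Variable

    def backward(self) -> None:
        # Propagate the critique back to the variable as a textual gradient.
        if self.target.requires_grad:
            self.target.grads.append(self.feedback)


class TextLoss:
    """Hypothetical loss: asks the critic LLM to evaluate a variable."""

    def __init__(self, instruction: str, llm: LLM):
        self.instruction = instruction
        self.llm = llm

    def __call__(self, var: Variable) -> Loss:
        feedback = self.llm(f"{self.instruction}\n{var.role}: {var.value}")
        return Loss(feedback, var)


class TextOptimizer:
    """Hypothetical optimizer: rewrites each parameter using its feedback."""

    def __init__(self, parameters: List[Variable], llm: LLM):
        self.parameters = parameters
        self.llm = llm

    def zero_grad(self) -> None:
        for p in self.parameters:
            p.grads.clear()

    def step(self) -> None:
        for p in self.parameters:
            if p.grads:
                prompt = "REVISE\n" + p.value + "\nFEEDBACK\n" + "\n".join(p.grads)
                p.value = self.llm(prompt)


def stub_llm(prompt: str) -> str:
    """Deterministic toy 'LLM' (assumes single-line values) so the sketch runs offline."""
    if prompt.startswith("REVISE"):
        _, value, _, *feedback = prompt.split("\n")
        return value + "." if "Add a final period." in feedback else value
    answer = prompt.rsplit(": ", 1)[-1]
    return "No change needed." if answer.endswith(".") else "Add a final period."


# One optimization iteration, in the familiar PyTorch order.
answer = Variable("The capital of France is Paris", role="concise answer")
loss_fn = TextLoss("Evaluate punctuation.", stub_llm)
optimizer = TextOptimizer([answer], stub_llm)

optimizer.zero_grad()
loss = loss_fn(answer)
loss.backward()
optimizer.step()
print(answer.value)  # The capital of France is Paris.
```

The design keeps the gradient metaphor literal: the loss produces feedback, `backward()` stores it on the variable, and `step()` consumes it to produce a revised value. A variable created with `requires_grad=False` (for example, the question itself) receives no feedback and is never rewritten.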

The conda-forge recipe was generated with the Conda-Forger App.


Last Updated

Jun 18, 2024 at 07:26

License

MIT

Total Downloads

1.7K

Version Downloads

1.7K


GitHub Repository

https://github.com/zou-group/textgrad

Documentation

http://textgrad.com/